Written by Ryan McGuine

In August, Martin Ravallion questioned the wisdom of allowing proponents of randomized controlled trials (RCTs) to dictate the development research agenda in a Center for Global Development working paper titled Should the Randomistas (Continue to) Rule? The paper followed an open letter, published in July by 15 renowned economists, that criticized development buzzwords, RCTs among them. Just what are RCTs, though, and how did they come to dominate development research?

Until about a decade ago, the story of foreign aid was mostly one of smart people in New York or Washington inventing solutions that fixed observed problems in poor countries on paper. Massive projects that could last years and cost millions were quickly scaled up in an attempt to get poor people to do what Westerners had determined was best for them. When a project failed to achieve the desired results, the usual culprits were a lack of will (read: money) on the part of donors, or even the program’s very existence, for discouraging people from seeking their own solutions. In this way, the West funded at least as many botched interventions as successful ones.

One famous failure is Jeffrey Sachs’ Millennium Villages Project (MVP), which involved injecting half a million dollars per year into each participating village. The MVP was based on the idea that poor countries need only an initial jolt of capital to escape the “poverty trap”: the notion that people are poor today because they were born poor. However, the MVP naïvely treated African community economies as machines that respond predictably to interventions, rather than as complex ecosystems with emergent properties that are difficult to anticipate. Most villages looked the same after the jolt as before. The MVP also failed to establish control villages that did not receive aid, so even where the interventions yielded positive outcomes, there was no way to quantify how much better off the participating villages were than comparable communities.

PlayPumps International is another notorious aid blunder. The initiative installed water pumps, powered by playground merry-go-rounds, that drew water from wells in poor communities across the developing world, and it received endorsements from celebrities including Laura Bush, Bill Clinton, and Jay Z. The assumption was that children would play on them, making life easier for the women in the village, who are traditionally the ones tasked with collecting water. Once again, the project rested on unrealistic assumptions about local conditions and was scaled up quickly without proven impact. It did not take long for children to tire of the equipment, leaving women to push the merry-go-rounds in circles themselves, a far more tedious way to draw water than the original hand pumps.

Only relatively recently has serious work been done to discern which interventions actually improve well-being, and which do so at the lowest cost. The tool that has gained perhaps the most traction is the RCT. Long used in medicine to test the effectiveness of drugs, RCTs in development aim to quantify the impact of a particular intervention designed to reduce poverty. The idea is simple: divide a given population into two groups using a random mechanism such as the flip of a coin or the roll of a die. One group receives the intervention while the other does not, and at the end of the prescribed time period, the condition of both groups is evaluated. Because chance alone decides who lands in which group, the two groups should be alike on average beforehand, so any systematic difference between them afterward can be attributed to the intervention itself.
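To make the coin-flip logic concrete, here is a minimal Python sketch using entirely made-up data; it is an illustration, not how any particular study was run. It assumes a hypothetical intervention that raises some outcome by exactly 2 units, plus a hypothetical, unobserved “motivation” trait that also raises outcomes on its own. The names `TRUE_EFFECT`, `simulate_person`, `difference_in_means`, and `evaluate` are invented for this example. It compares assignment by coin flip with a scenario in which the more motivated people sign up for the program themselves, estimating the effect in both cases as the difference in mean outcomes between the two groups.

```python
import math
import random
import statistics

# Hypothetical effect of the intervention on the outcome (an assumption for this sketch).
TRUE_EFFECT = 2.0


def simulate_person(rng):
    """Draw one made-up person: an unobserved 'motivation' trait and a baseline outcome."""
    motivation = rng.gauss(0.0, 1.0)
    # Assumption: motivated people do better even without the program.
    baseline = 10.0 + 2.0 * motivation + rng.gauss(0.0, 2.0)
    return motivation, baseline


def difference_in_means(treated, control):
    """Estimate the effect as the gap in mean outcomes, with its standard error."""
    estimate = statistics.mean(treated) - statistics.mean(control)
    se = math.sqrt(
        statistics.variance(treated) / len(treated)
        + statistics.variance(control) / len(control)
    )
    return estimate, se


def evaluate(n=10_000, randomize=True, seed=0):
    """Assign n simulated people to treatment or control and estimate the effect."""
    rng = random.Random(seed)
    treated, control = [], []
    for _ in range(n):
        motivation, baseline = simulate_person(rng)
        if randomize:
            gets_program = rng.random() < 0.5  # the coin flip
        else:
            gets_program = motivation > 0  # the more motivated opt in themselves
        if gets_program:
            treated.append(baseline + TRUE_EFFECT)
        else:
            control.append(baseline)
    return difference_in_means(treated, control)


rct = evaluate(randomize=True)
self_selected = evaluate(randomize=False)
print(f"coin flip:     estimate {rct[0]:.2f} (SE {rct[1]:.2f})")
print(f"self-selected: estimate {self_selected[0]:.2f} (SE {self_selected[1]:.2f})")
```

Run as written, the coin-flip estimate should land close to the assumed effect of 2, while the self-selected comparison overstates it substantially, because the people who opted in would have done better anyway. That gap is the selection bias that randomization is meant to remove.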

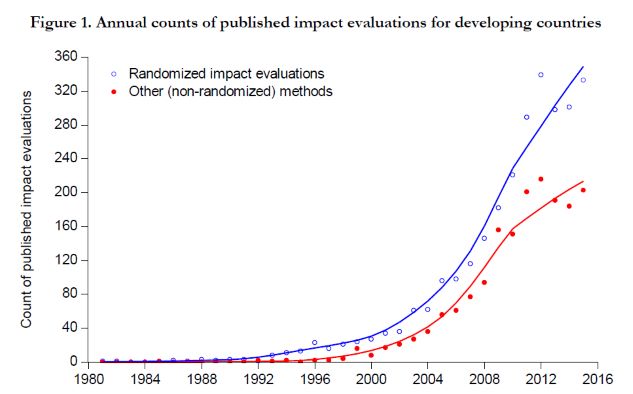

RCTs are often touted as the “gold standard” of impact evaluation. Compared to most other econometric methods, RCTs rely on fewer assumptions, produce results that are clearer to interpret, and reduce selection bias (statistics-speak for an experimental sample chosen in a manner that represents a certain characteristic of the overall population disproportionately, skewing the evaluation’s results). The number of RCTs conducted over the last two decades has increased massively, illustrated by the plot below, and RCTs are now nearly synonymous with the entire field of development economics.

Source: Martin Ravallion (2018), Should the Randomistas (Continue to) Rule?

The large body of empirical evidence accumulated through RCTs has produced a relatively coherent theory of poverty: how the extremely poor, defined as those living on less than the World Bank’s poverty line of $1.90/day, make decisions, and what kinds of interventions make them less poor. Much of that evidence is presented in Abhijit Banerjee and Esther Duflo’s book Poor Economics, which seeks to determine not “whether aid is good or bad, but whether particular instances of aid did some good or not.” That RCTs have made important contributions to this theory of poverty is hardly contested. The real question is whether RCTs deserve such an exalted position in academia, given that their dominance necessarily crowds out a great deal of other research.

Lant Pritchett presented one argument against RCTs’ privileged status in an episode of the podcast EconTalk. He argued that investing time and resources in figuring out how to move the poorest of the poor above a fixed poverty line is a substantial misallocation of academic effort. In his view, understanding the conditions under which countries adopt growth-promoting policies yields far higher returns than identifying the most effective micro-interventions. Mr Pritchett points to Asian countries to show that the largest poverty reductions in recent history have occurred where governments adopted measures that raised the productivity of the whole economy, including the productivity of the poor, while receiving little in the way of formal aid. Rather than relying on poverty-reduction interventions, these countries paired export-oriented industrialization strategies, focused on sectors in which they had a comparative advantage, with minimal, selective interventions to guide resource allocation and address market failures.

The 15 economists who wrote the July letter criticize RCTs as too expensive to conduct before implementing every policy, and too abstract to provide useful information about an intervention’s effectiveness in the real world. They also criticize overzealous advocates of “aid effectiveness” more generally for focusing too much on measurable short-term results while ignoring “the broader macroeconomic, political, and institutional drivers of impoverishment and underdevelopment.” This focus can produce interventions that help at the margins but leave the underlying poverty-creating systems, and with them the fundamental causes of underdevelopment, unaddressed.

Given these arguments, one might wonder whether RCTs have any role to play in the development economics research agenda. Chris Blattman offered one convincing argument in favor of the method in another EconTalk episode. According to Mr Blattman, our society has collectively decided that people in rich countries are going to spend a lot of money trying to improve the lives of people in poor countries. The reasons vary: some feel a moral imperative to “pay it forward” by helping the least among us, while others prefer arguments about bolstering security in strategic regions. Almost without exception, poverty-reduction programs can be improved at the margin. Continuing to pour money into programs that may help, but probably not as much as they could, is not only unfair to taxpayers in donor countries but also a huge missed opportunity to maximize the positive impact of that spending.

The idea that incremental adjustments can bring about beneficial change is gaining prominence beyond the sphere of aid as well. In the book Building State Capability, Matt Andrews, Michael Woolcock, and Mr Pritchett suggest that starting with locally identified problems and designing situation-specific strategies to address them is a better approach than what they call “isomorphic mimicry”: establishing institutions that look like those in the West but do not actually perform the same functions. Similarly, in Poor Economics, Mr Banerjee and Ms Duflo suggest that rather than starting with sweeping institutional change, focusing on concrete, measurable programs can slowly chip away at “the three I’s of development policy” (ideology, ignorance, and inertia) and spur virtuous cycles that lead to emergent institutional change. Thus, there is reason enough to keep conducting RCTs, though their proper place is probably as one of many tools available to a development economist, rather than at the top of a hierarchy of methods.